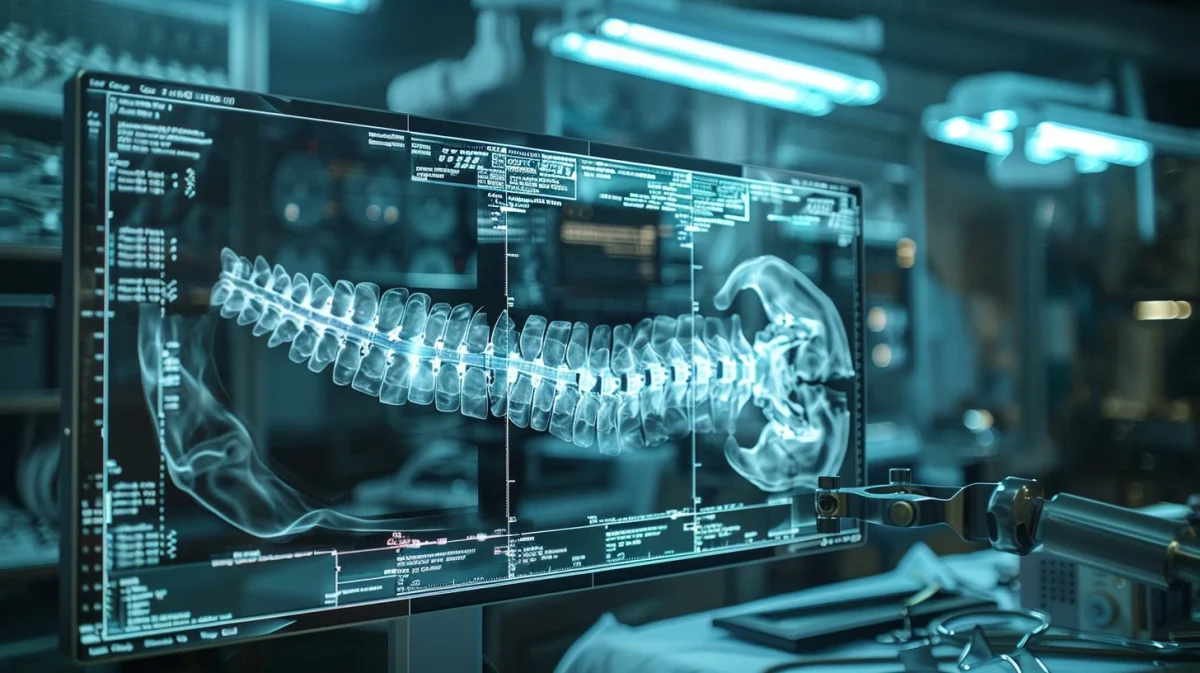

AI-based patient diagnosis and disease prediction can detect diseases earlier and anticipate potential health issues, allowing for proactive medical intervention before conditions become life-threatening. Medical professionals are already highly accurate at identifying these health concerns, but artificial intelligence can be more accurate still on some tasks, and since lives may be at stake, every bit of precision matters. This is because these algorithms excel at recognizing complex patterns and relationships within large datasets, including medical records, genetic information, and clinical data, which allows them to pick up subtle indicators of disease or risk factors that may not be immediately apparent to human clinicians. In addition, AI can analyze vast amounts of patient data in a fraction of the time it would take a human.

This speed and efficiency enable earlier detection of disease and timely intervention, potentially improving patient outcomes. For example, if an AI algorithm identifies a cancer in its early stages, the patient can receive the necessary treatment before the disease progresses, possibly saving their life or at least sparing them a few trips to the doctor. As in medical imaging, AI algorithms are not susceptible to the fatigue, subjectivity, and variations in interpretation that can affect human clinicians. They can provide a consistent analysis, reducing the likelihood of diagnostic errors or disparities in healthcare outcomes. Even though these algorithms show much promise, they also come with challenges and limitations.

Because AI does not possess free will or independent thought, it relies entirely on the quality and accuracy of the data it has been trained on. Biases present in that data, such as underrepresentation of certain demographics or healthcare disparities, can lead to biased predictions and exacerbate existing inequalities in healthcare. The problem is compounded by the fact that the deep learning models often used for diagnosis are frequently described as “black boxes”: their decision-making process is opaque and not easily interpreted by humans. Even if the results provided by AI are in fact helpful, a doctor who cannot understand them is unlikely to act on them. This lack of explainability has hindered trust in and acceptance of AI-driven diagnostic tools among both healthcare professionals and their patients.

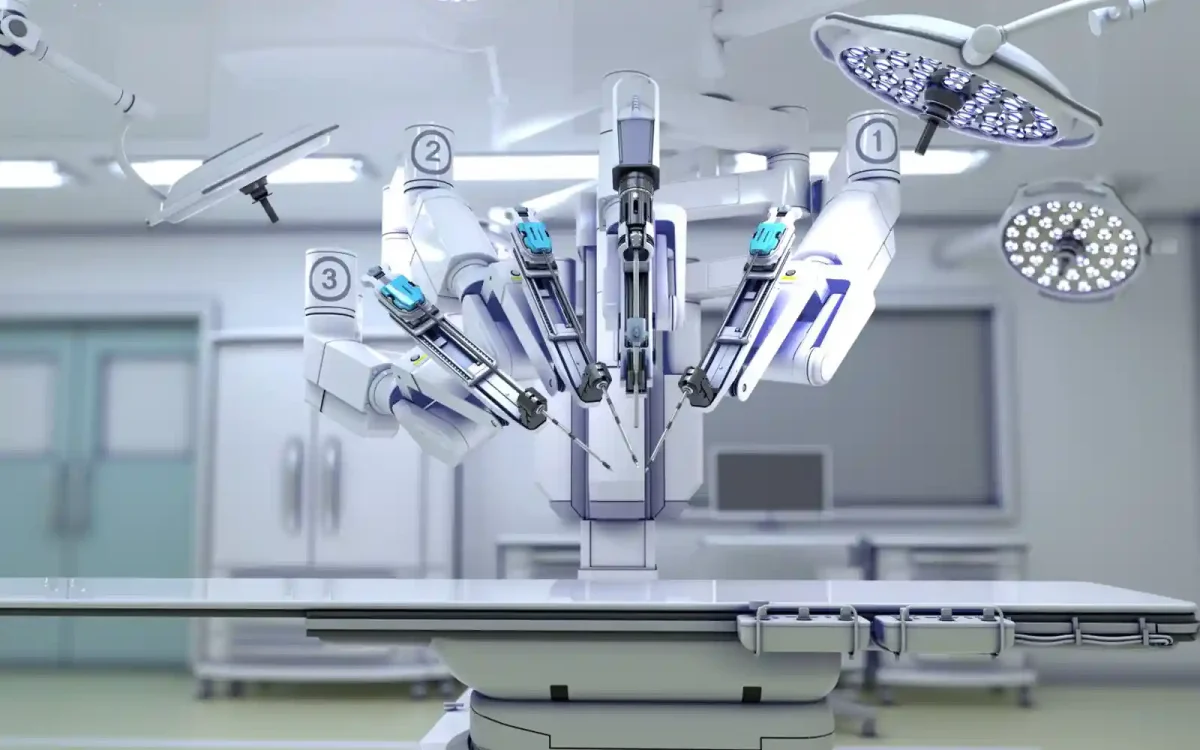

Furthermore, AI may struggle to understand the broader clinical context of a patient’s condition, including factors such as medical history, co-morbidities, and patient preferences. Incorporating this contextual understanding into AI algorithms remains a challenge. Like any diagnostic tool, AI algorithms are prone to errors, possibly fewer than humans make, but errors nonetheless. These include false positives (incorrectly identifying a condition that is not present) and false negatives (failing to identify a condition that is present). Such errors can have significant consequences for patient care, which is why human oversight and validation may be required. The deployment of AI in healthcare also raises regulatory and legal considerations, such as liability, accountability, and compliance with medical regulations and standards. Even though a substantial amount of information has been gathered about artificial intelligence, some aspects and possible concerns remain unknown. Despite this, AI shows great potential to complement human expertise in diagnosis and disease prediction, leading to more accurate, timely, and personalized healthcare interventions.
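
To make the false positives and false negatives mentioned above more concrete, here is a minimal Python sketch of how they might be counted for a diagnostic model and summarized as sensitivity and specificity. The function names and the eight example patients are made up purely for illustration; they do not come from any real model or dataset.

```python
from dataclasses import dataclass


@dataclass
class OutcomeCounts:
    true_positives: int   # condition present, model flagged it
    false_positives: int  # condition absent, model flagged it anyway
    true_negatives: int   # condition absent, model did not flag it
    false_negatives: int  # condition present, model missed it


def count_outcomes(actual, predicted):
    """Compare ground-truth labels with model predictions (both lists of bools)."""
    tp = fp = tn = fn = 0
    for has_condition, flagged in zip(actual, predicted):
        if flagged and has_condition:
            tp += 1
        elif flagged and not has_condition:
            fp += 1
        elif not flagged and has_condition:
            fn += 1
        else:
            tn += 1
    return OutcomeCounts(tp, fp, tn, fn)


def sensitivity(counts):
    """Share of patients with the condition that the model caught."""
    total = counts.true_positives + counts.false_negatives
    if total == 0:
        raise ValueError("no patients with the condition in the sample")
    return counts.true_positives / total


def specificity(counts):
    """Share of patients without the condition that the model correctly cleared."""
    total = counts.true_negatives + counts.false_positives
    if total == 0:
        raise ValueError("no patients without the condition in the sample")
    return counts.true_negatives / total


# Hypothetical example: 8 patients, 3 of whom actually have the condition.
actual = [True, True, True, False, False, False, False, False]
predicted = [True, True, False, True, False, False, False, False]

counts = count_outcomes(actual, predicted)
print(counts)
print(f"sensitivity = {sensitivity(counts):.2f}")
print(f"specificity = {specificity(counts):.2f}")
```

In this toy sample the model misses one sick patient (a false negative) and wrongly flags one healthy patient (a false positive). Which of the two errors is more costly depends on the condition being screened for, which is one reason human oversight remains important.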

Continued research, validation, and collaboration among AI developers, healthcare providers, and regulatory bodies are vital to address these challenges and ultimately harness the full potential of AI in improving healthcare outcomes.